Guidelines for Secure AI System Development set the foundation for safe, reliable tech. Learn the steps for robust AI solutions. Ditching proper security protocols when developing AI? That’s like dancing in a minefield blindfolded. I’ve seen too many AI projects crumble due to lax security practices, and the aftermath isn’t pretty. Secure your AI system with end-to-end encryption and regular security audits, without compromise.

The development of Artificial Intelligence (AI) systems is a complex process that involves various stages, each with its own set of security considerations.

Recent guidelines issued by prominent cybersecurity organizations, including the U.S. Cybersecurity and Infrastructure Security Agency (CISA), the UK National Cyber Security Centre (NCSC), and other international partners, aim to provide a comprehensive framework for securing AI systems throughout their lifecycle.

These guidelines are crucial in addressing the unique security vulnerabilities associated with AI technologies and ensuring the development of safe, secure, and trustworthy AI systems.

Stage 1: Secure Design

The initial stage of AI system development emphasizes the importance of secure design, which begins with employee security training and an AI risk assessment. This stage involves identifying and evaluating risks specific to the AI system being developed, beyond general risk assessments. It’s essential to ensure that vendors are trusted and adhere to security standards, as their involvement can significantly impact the system’s security.

Stage 2: Secure Development

During the secure development stage, technical controls are implemented within the AI system. These controls include configuring logging so that the confidentiality, integrity, and availability of the logs are protected, documenting the data used to train and tune the AI model, and maintaining a baseline version of the system to roll back to if it is compromised.
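One way to protect the integrity of logs is to make them tamper-evident. The sketch below is a minimal illustration, not something the guidelines prescribe: it chains each log entry to the previous one with a SHA-256 hash so that later edits can be detected. The function names (`append_entry`, `verify_log`) and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event."""
    payload = json.dumps(event, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify_log(log: list) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A hash chain only addresses integrity. Confidentiality and availability would still depend on access controls and backups kept outside the system being logged.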

Stage 3: Secure Deployment

Before deploying the AI system, it’s crucial to segregate environments within the organization to mitigate the impact of potential compromises. This stage also involves testing system software updates before deployment, configuring AI systems to automatically install updates, and documenting lessons learned to improve future projects.

Stage 4: Secure Operation and Maintenance

Once the AI system is operational, continuous monitoring is necessary to evaluate system performance and quickly identify any compromises. Proper access controls should be in place, including specific controls for AI systems to prevent unauthorized access and maintain the integrity of the AI model. The organization’s incident response team should also be trained on AI-specific issues.
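As a rough illustration of AI-specific access controls, the sketch below separates the right to query a model from the right to read its weights or replace it. The roles, actions, and function names are assumptions made up for this example; a real deployment would usually rely on the organization's existing identity and access management system.

```python
from enum import Enum


class Action(Enum):
    QUERY = "query"                # send inputs and receive predictions
    READ_WEIGHTS = "read_weights"  # download the model artifact
    UPDATE_MODEL = "update_model"  # replace the deployed model


# Hypothetical role-to-permission mapping; unknown roles get no permissions.
ROLE_PERMISSIONS = {
    "end_user": {Action.QUERY},
    "ml_engineer": {Action.QUERY, Action.READ_WEIGHTS},
    "release_manager": {Action.QUERY, Action.READ_WEIGHTS, Action.UPDATE_MODEL},
}


def is_allowed(role: str, action: Action) -> bool:
    """Deny by default: only explicitly granted actions are permitted."""
    return action in ROLE_PERMISSIONS.get(role, set())


def require(role: str, action: Action) -> None:
    """Raise PermissionError when the role may not perform the action."""
    if not is_allowed(role, action):
        raise PermissionError(f"role {role!r} may not perform {action.value}")
```

Calling `require("end_user", Action.UPDATE_MODEL)` raises `PermissionError`, which keeps model replacement limited to a small, named group.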

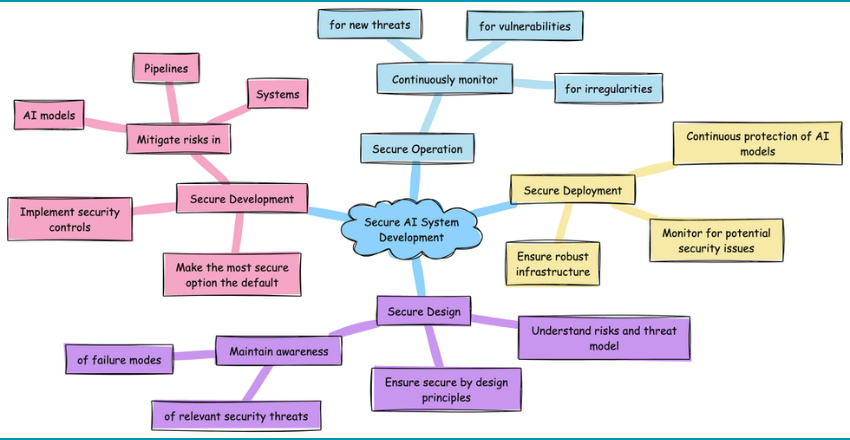

The key recommendations for secure AI system development

- Secure Design: Understand risks and threat model, ensure secure by design principles, and maintain awareness of relevant security threats and failure modes.

- Secure Development: Implement security controls and mitigations within AI models, pipelines, and systems, and make the most secure option the default.

- Secure Deployment: Ensure robust infrastructure, continuous protection of AI models, and monitor for potential security issues.

- Secure Operation: Continuously monitor AI systems to detect and address new threats, vulnerabilities, or irregularities.

Providers of AI systems can implement security controls by design and default

- Integrating Security: Incorporate security into the AI development process from the outset, including threat modeling, code reviews, and ongoing security testing.

- Model Security: Ensure robust encryption for data at rest and in transit, and implement strict access controls.

- Explainability: Develop clear ethical guidelines to address societal and ethical implications of AI, and maintain detailed documentation of AI model architectures, data sources, and training procedures.

- Secure Development Lifecycle: Keep security embedded at every stage of the lifecycle, from design through operation and maintenance, rather than adding it at the end.
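"Secure by default" can be made concrete in configuration code. The following sketch assumes a hypothetical `InferenceServiceConfig` whose field names and default values are invented for illustration. The point is that every protective setting is on unless someone explicitly turns it off, and that deviations are easy to list for review.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class InferenceServiceConfig:
    """Hypothetical service settings; the safest value is the default."""
    require_authentication: bool = True
    tls_enabled: bool = True
    log_requests: bool = True
    allow_remote_code: bool = False
    max_prompt_chars: int = 4000


def deviations_from_defaults(config: InferenceServiceConfig) -> list:
    """List the settings that differ from the secure defaults."""
    baseline = InferenceServiceConfig()
    return [
        f.name
        for f in fields(config)
        if getattr(config, f.name) != getattr(baseline, f.name)
    ]
```

A deployment check could refuse to start, or require sign-off, whenever `deviations_from_defaults` returns a non-empty list.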

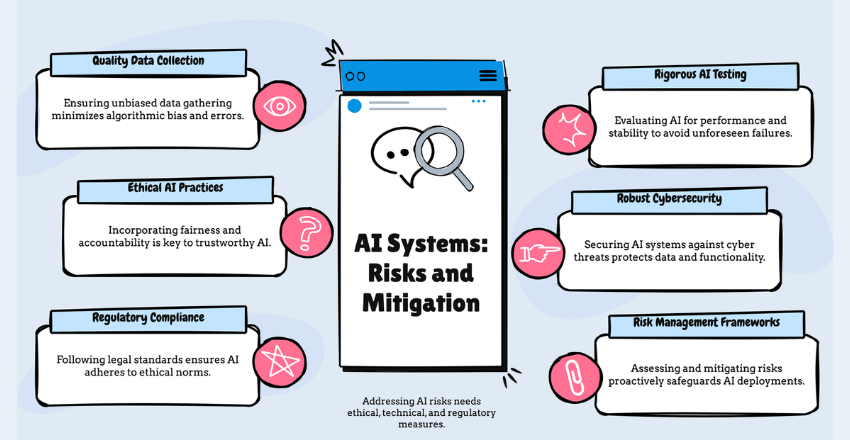

The risks associated with AI systems and how they can be mitigated

The risks associated with AI systems can be broadly categorized into three main areas: the emerging AI race, organizational risks, and the rise of rogue AIs. These risks encompass concerns such as algorithmic bias, privacy breaches, autonomous weapon systems, and the displacement of human labor.

To mitigate these risks, it’s crucial to incorporate ethical considerations, rigorous testing, and robust oversight measures in AI development and deployment.

Some key strategies for mitigating AI risks include:

- Data quality assurance and validation: Ensuring accurate and unbiased data collection and preprocessing is critical for minimizing data-related risks.

- Rigorous testing and model validation: Thoroughly evaluating AI models for performance and stability is essential to mitigate model-related risks.

- Adherence to ethical guidelines and principles: Incorporating fairness, transparency, and accountability in AI decision-making is crucial to address ethical risks.

- Implementing cybersecurity measures: Protecting AI systems from cyber threats and unauthorized access is vital to mitigate security risks.

- Compliance with legal and regulatory requirements: Adhering to data protection regulations and ethical standards is necessary to mitigate legal and regulatory risks.

- Adoption of risk management practices: Developing comprehensive risk management frameworks and protocols can help organizations identify, assess, and mitigate risks associated with AI technologies.

Additionally, it’s essential to stay informed about the latest AI risks and best practices for risk mitigation. This includes understanding the types of risks, their drivers, and their interdependencies. It also means engaging in ongoing dialogue among technologists, legal experts, ethicists, and other stakeholders to balance fostering innovation against protecting individuals and society from potential harm.
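To make the data quality strategy above more tangible, here is a minimal sketch of an automated check that could run before training. It flags records with missing fields and labels that dominate the dataset. The function name, the record layout, and the 90% threshold are all assumptions for illustration; real pipelines typically need domain-specific checks as well.

```python
from collections import Counter


def validate_records(records, required_fields, label_field, max_label_share=0.9):
    """Return a list of human-readable data quality problems."""
    problems = []

    # Completeness: every required field must be present and non-empty.
    for i, rec in enumerate(records):
        missing = [f for f in required_fields if rec.get(f) in (None, "")]
        if missing:
            problems.append(f"record {i}: missing {', '.join(missing)}")

    # Balance: warn when a single label dominates the labeled records.
    labels = Counter(
        rec[label_field] for rec in records if rec.get(label_field) not in (None, "")
    )
    total = sum(labels.values())
    for label, count in labels.items():
        if total and count / total > max_label_share:
            problems.append(f"label {label!r} makes up {count / total:.0%} of the data")

    return problems
```

An empty result does not prove the data is unbiased. It only shows that these two simple checks passed.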

Best practices for ensuring the security of AI systems

- Security Awareness: Invest in ongoing training and awareness programs to educate teams about the latest security threats and best practices.

- Regulatory Compliance: Stay informed about and comply with relevant regulations, such as GDPR, HIPAA, and industry-specific standards.

- Incident Communication Plan: Develop a clear and effective communication plan for notifying stakeholders, customers, and regulators in the event of a security breach involving AI systems.

- Secure Model Versioning and Rollback: Maintain a version history of AI models, allowing for quick rollbacks in case of model compromises or failures.

- Red Team Testing: Conduct red team exercises to simulate real-world attacks on AI systems and identify vulnerabilities.

- AI Ethics Review Board: Establish an AI ethics review board to assess the ethical implications of AI projects and ensure alignment with ethical AI principles.

These guidelines and best practices aim to ensure the development of AI systems that are not only innovative and effective but also secure and trustworthy.
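The versioning and rollback practice listed above could look roughly like the sketch below. It keeps model artifacts in memory with their SHA-256 checksums and verifies a checksum before rolling back. The `ModelRegistry` class and its methods are hypothetical; production systems would normally store artifacts in a dedicated model registry or object store.

```python
import hashlib


class ModelRegistry:
    """Toy in-memory registry that tracks versions and the active one."""

    def __init__(self):
        self._versions = []   # list of (version, sha256 hex digest, artifact bytes)
        self._active = None   # index into self._versions, or None if empty

    def register(self, version: str, artifact: bytes) -> str:
        """Store a new version, make it active, and return its checksum."""
        digest = hashlib.sha256(artifact).hexdigest()
        self._versions.append((version, digest, artifact))
        self._active = len(self._versions) - 1
        return digest

    def active_version(self):
        """Return the active version string, or None if nothing is registered."""
        if self._active is None:
            return None
        return self._versions[self._active][0]

    def rollback(self) -> str:
        """Activate the previous version after re-checking its checksum."""
        if self._active is None or self._active == 0:
            raise RuntimeError("no earlier version to roll back to")
        version, digest, artifact = self._versions[self._active - 1]
        if hashlib.sha256(artifact).hexdigest() != digest:
            raise RuntimeError(f"stored artifact for {version} failed its integrity check")
        self._active -= 1
        return version
```

Re-checking the checksum matters because a rollback target that was tampered with would reintroduce the compromise it is meant to undo.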

Additional Recommendations

The guidelines also recommend participating in information-sharing communities to collaborate and share best practices, maintaining open lines of communication for system security feedback, and quickly mitigating and remediating issues as they arise.

Providers of AI components are advised to implement security controls by design and default within their ML models, pipelines, and systems, to protect against vulnerabilities and adversarial machine learning (AML) attacks.

Wrapping up

The Guidelines for Secure AI System Development provide a structured approach to addressing the security challenges associated with AI technologies. By following them, developers, providers, and system owners can build security into every stage of an AI system’s lifecycle instead of retrofitting it later.

This proactive approach to AI security is essential in leveraging the benefits of AI technologies while mitigating potential risks and vulnerabilities.

FAQ

What are the first steps to ensuring security in AI system development?

A: The foundation for secure AI begins with a clear understanding of potential vulnerabilities. Start by conducting a thorough risk assessment tailored to the AI system in question. This involves identifying sensitive data, potential threats, and areas of weakness.

Adopting secure coding practices is also crucial. This means following coding standards that prioritize security, such as validating all input to prevent injection attacks and data breaches. Additionally, always encrypt sensitive data, both at rest and in transit, to protect against unauthorized access.
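As a small example of input validation guarding against injection, the sketch below combines an allow-list pattern with a parameterized SQL query using Python's built-in `sqlite3` module. The `predictions` table, the username rule, and the function names are assumptions for illustration only.

```python
import re
import sqlite3

# Hypothetical rule: 3 to 32 letters, digits, or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,32}")


def validate_username(value) -> str:
    """Reject anything that does not match the allow-list pattern."""
    if not isinstance(value, str) or not USERNAME_RE.fullmatch(value):
        raise ValueError("invalid username")
    return value


def count_predictions(conn: sqlite3.Connection, username: str) -> int:
    """Count stored predictions for a user without building SQL from strings."""
    validate_username(username)
    # The "?" placeholder lets the driver pass the value as data, not as SQL.
    row = conn.execute(
        "SELECT COUNT(*) FROM predictions WHERE username = ?", (username,)
    ).fetchone()
    return row[0]
```

The two defenses are independent: the parameterized query prevents SQL injection even if validation is loosened, and validation rejects malformed input before it reaches any other component.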

How can developers integrate privacy into AI systems from the beginning?

A: Privacy by Design (PbD) is a key principle that should be integrated into the development process of AI systems. This approach involves incorporating privacy controls and considerations into the system architecture from the start, rather than as an afterthought.

One practical step is to anonymize datasets used in training AI to minimize the risk of personal data exposure. Furthermore, ensure that the AI system is designed to collect only the data that is absolutely necessary for its function, applying the principle of data minimization. Regular privacy impact assessments can help identify any potential issues as the system evolves.
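The sketch below illustrates data minimization together with a weaker cousin of anonymization. It drops every field that is not on an allow-list and replaces the user identifier with a keyed hash. Note the swap: keyed hashing is pseudonymization, not full anonymization, because whoever holds the key can link records back to users. The field names and function names are hypothetical.

```python
import hashlib
import hmac

# Hypothetical allow-list: only fields the model actually needs.
ALLOWED_FIELDS = {"user_id", "age_band", "query_text"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and pseudonymize the user identifier."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "user_id" in kept:
        kept["user_id"] = pseudonymize(str(kept["user_id"]), secret_key)
    return kept
```

Free-text fields such as `query_text` can still contain personal data, so a step like this would complement a privacy impact assessment rather than replace it.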

What role does continuous monitoring play in maintaining the security of AI systems?

A: Continuous monitoring is critical in the dynamic landscape of AI systems. This involves regular scans for vulnerabilities, the application of patches, and updates to AI models and the software they rely on.

One practical approach is to implement automated security tools that can detect anomalies in system behavior, which may indicate a security threat. Additionally, it’s important to maintain an up-to-date inventory of all components used in your AI system, including third-party libraries, to ensure that all elements remain secure over time.

Embracing a culture of continuous learning and improvement among the development team is also essential to stay ahead of emerging security threats.
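The anomaly detection idea in the answer above can be sketched with a simple z-score check. It compares new measurements, for example requests per minute, against a baseline from normal operation. The function name and the threshold of three standard deviations are assumptions; real monitoring tools use more robust statistics and account for seasonality.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return indexes of observed values far from the baseline mean.

    `baseline` needs at least two values so a standard deviation exists.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is unusual.
        return [i for i, x in enumerate(observed) if x != mu]
    return [i for i, x in enumerate(observed) if abs(x - mu) / sigma > threshold]
```

A flagged value is a prompt for investigation, not proof of an attack. Sudden spikes can also come from legitimate traffic changes.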

External Resources

https://www.ic3.gov/Media/News/2023/231128.pdf

https://www.techrepublic.com/article/new-ai-security-guidelines/

https://nsfocusglobal.com/interpretation-of-guidelines-for-secure-ai-system-development/

https://www.splunk.com/en_us/blog/learn/secure-ai-system-development.html

https://ai-watch.ec.europa.eu/topics/ai-standards_en

https://aistandardshub.org/resource/main-training-page-example/1-what-are-standards/

https://owasp.org/www-project-ai-security-and-privacy-guide/

https://www.iso.org/sectors/it-technologies/ai

https://www.ncsc.gov.uk/collection/guidelines-secure-ai-system-development

https://www.ncsc.gov.uk/collection/guidelines-secure-ai-system-development/guidelines/secure-design

https://www.linkedin.com/pulse/best-practices-ensuring-security-ai-systems-srinivas-yenuganti-rcgsc