AI software testing is not just a trend; it’s a necessity for quality assurance. Despite this, some teams resist, fearing complexity and high costs. Through targeted training and smart tool choices, we’ve made AI testing accessible and efficient even for small teams.

Artificial Intelligence (AI) is transforming the software testing landscape, offering faster, more accurate, and more reliable testing processes.

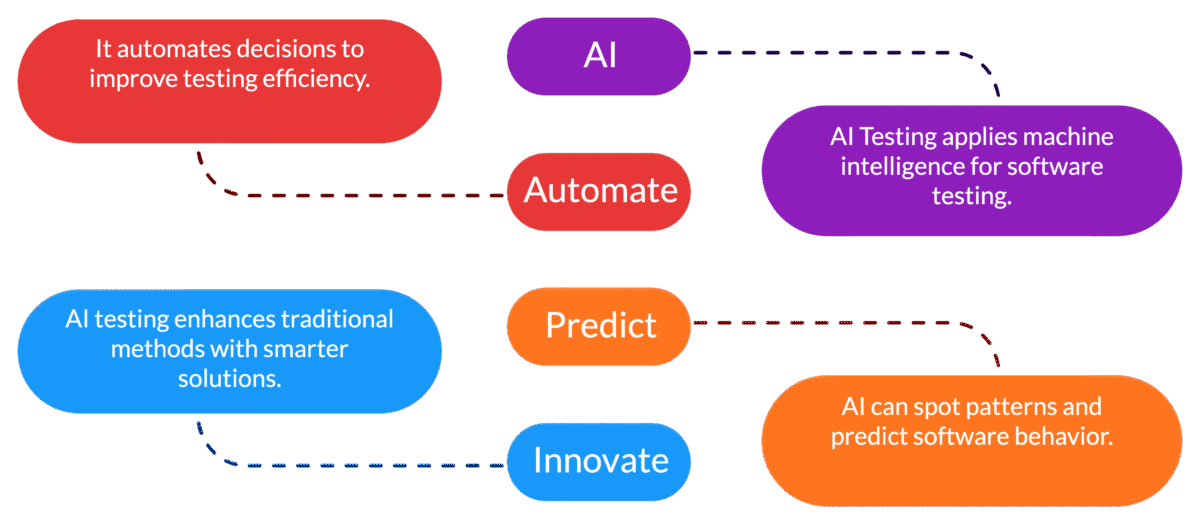

What is AI Testing?

AI testing isn’t just another “buzzword.” It’s simply software testing that uses machine intelligence to automate decisions, spot patterns, and predict outcomes in ways rules-based scripts can’t.

Think of it this way: manual testing is riding a bicycle. AI testing is being chauffeured in a self-driving Tesla that diagnoses the engine mid-drive, fixes it on the fly, and reroutes around traffic before a jam even happens.

It’s still testing. It just doesn’t rely on the old, dumb ways.

Why Perform AI Testing?

Because traditional testing is broken. Period.

It doesn’t scale.

It breaks with every release.

It’s expensive: human-resource expensive.

It can’t anticipate bugs it wasn’t told to look for.

AI flips the script. It learns. It adapts. It predicts. And, more importantly, it evolves with every single run.

If you’re releasing faster than your QA team can blink, this isn’t a “nice to have”; it’s mandatory for survival.

Challenges in Traditional Test Automation

Static scripts aren’t smart.

You tweak the user interface, and your entire test suite collapses like a house of cards. Relabel a button, move a field, or change an ID, and your scripts no longer know what to do. Suddenly, you’re not testing the product; you’re babysitting your test framework. That’s not leverage. That’s a liability.

Maintenance becomes the bottleneck.

The more test coverage you try to add, the more brittle your system becomes. Every additional line of test code increases the chance of false positives, failed test runs, and wasted dev hours. You end up needing more people to manage more scripts, which defeats the whole reason you automated in the first place. Instead of freeing up time, you’ve just shifted the burden.

Feedback loops drag.

Every second your QA team spends waiting on results is a second your engineers aren’t shipping. And if that delay means bugs slip through or features stall, it’s not just annoying; it’s expensive. You’re burning both time and trust. Customers don’t care why a bug happened. They care that it did happen. And they remember.

Traditional automation is reactive, not predictive.

It only catches what you tell it to look for. If it hasn’t been pre-programmed, it doesn’t exist to the system. This creates blind spots that manual testers might catch but automation will miss until it’s too late.

Here’s the Problem

Legacy automation is like patching holes in a sinking ship. It’s a constant game of catch-up. You’re fixing what breaks, but never building something that can prevent it in the first place. That’s not sustainable. It’s not scalable. And it’s not how teams win in fast-moving, high-stakes environments.

When you switch from fragile, reactive automation to AI-driven testing, you move from patching leaks to engineering a self-repairing vessel. You stop playing defense and start scaling with confidence. Your feedback loops tighten. Your test coverage gets smarter, not heavier.

And your team stops firefighting and starts building again. You’re not just fixing tests. You’re reclaiming your time, your budget, and your competitive edge.

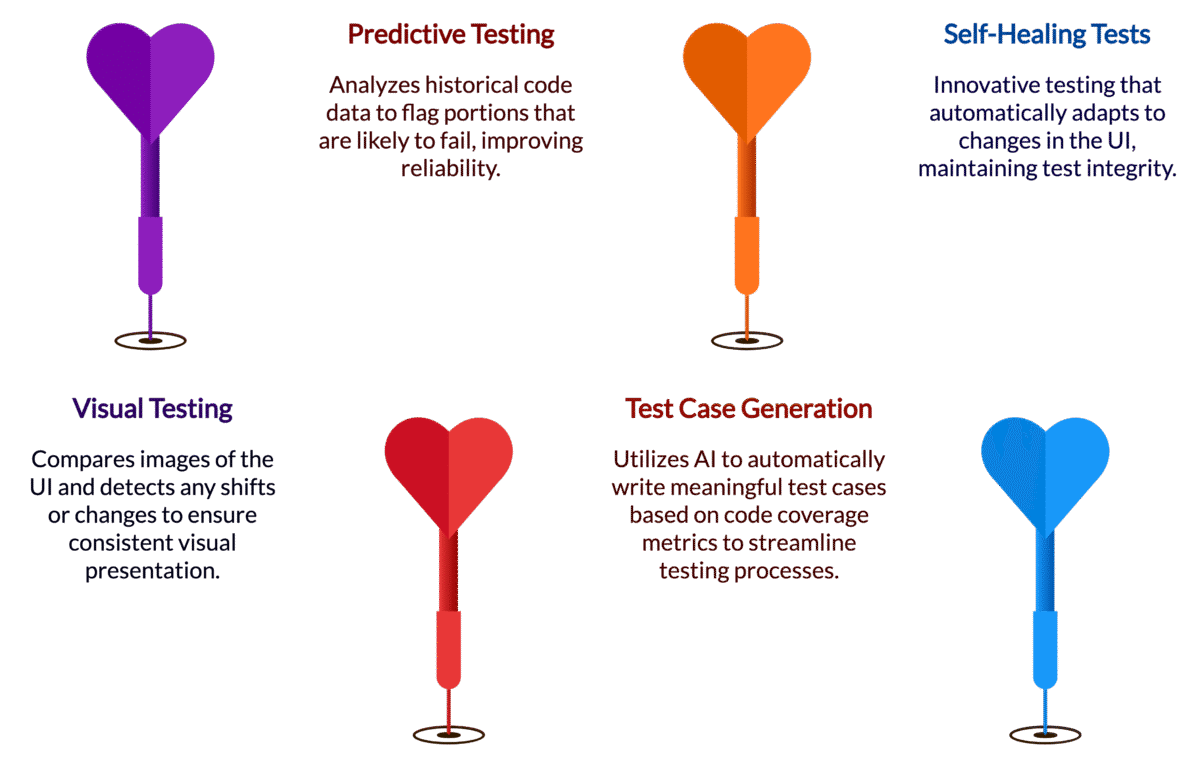

Types of AI Testing

Visual Testing: Compares screenshots against a baseline and detects UI shifts.

Predictive Testing: Flags code likely to fail based on its failure history.

Test Case Generation: AI writes test cases that target gaps in code coverage.

Self-Healing Tests: Tests that adapt their element locators when the UI changes.

You don’t need more testers. You need smarter tests.
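Self-healing can be illustrated with a small sketch. It assumes a hypothetical, simplified page model (a list of `Element` objects rather than a real browser DOM) and a hypothetical `find_element` helper. The test first looks up its element by id and, if the id has changed, falls back to whichever element best matches the attributes recorded when the test last passed. Real tools use richer signals and learned weights; this shows only the fallback logic.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Simplified stand-in for a DOM element (hypothetical, not a real browser API)."""
    tag: str
    attrs: dict = field(default_factory=dict)
    text: str = ""


def fingerprint(el):
    """Flatten the properties used to recognise an element across releases."""
    return {"tag": el.tag, "text": el.text, **el.attrs}


def similarity(a, b):
    """Fraction of keys (from either fingerprint) whose values match exactly."""
    keys = set(a) | set(b)
    if not keys:
        return 0.0
    return sum(a.get(k) == b.get(k) for k in keys) / len(keys)


def find_element(page, element_id, last_known, threshold=0.5):
    """Look up by id; if that fails, 'heal' by picking the closest match to last_known.

    Returns (element, healed), where healed is True when the fallback was used.
    """
    for el in page:
        if el.attrs.get("id") == element_id:
            return el, False
    best = max(page, key=lambda el: similarity(fingerprint(el), last_known), default=None)
    if best is not None and similarity(fingerprint(best), last_known) >= threshold:
        return best, True
    raise LookupError(f"no element matches id={element_id!r} or its last-known fingerprint")


# Fingerprint recorded the last time the test passed.
last_known = {"tag": "button", "text": "Checkout", "id": "checkout-btn", "class": "btn primary"}

# After a release, the button's id was renamed but everything else stayed the same.
page = [
    Element("a", {"id": "home", "class": "nav"}, "Home"),
    Element("button", {"id": "checkout-button", "class": "btn primary"}, "Checkout"),
]

el, healed = find_element(page, "checkout-btn", last_known)
print(el.attrs["id"], healed)  # checkout-button True
```

In practice you would probably want every healed lookup logged for review, so that a wrong match can't silently turn a failing test green.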

How to Perform AI Testing

Here’s how it works:

Feed the model data. Logs, past failures, UI maps.

Let it analyze. Pattern recognition kicks in.

Generate tests or augment existing ones.

Deploy, monitor, improve. It gets better with use.

The heavy lifting isn’t the code; it’s the data. The cleaner the signal, the more accurate the test intelligence.
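To make the feed, analyze, and generate steps concrete, here is a deliberately simple sketch. It uses plain co-occurrence counts instead of a trained ML model. It assumes a hypothetical CI history format, a list of `(changed_files, failed_tests)` pairs with one pair per run, and estimates how often each test failed when a given file changed. The hypothetical `suggest_tests` helper then proposes which tests to run for a new change. All file and test names are made up.

```python
from collections import Counter, defaultdict


def learn_cofailures(history):
    """history: list of (changed_files, failed_tests) pairs, one per past CI run.

    Returns {file: {test: estimated P(test fails | file changed)}}.
    """
    file_changes = Counter()
    together = defaultdict(Counter)
    for changed_files, failed_tests in history:
        for f in set(changed_files):
            file_changes[f] += 1
            for t in set(failed_tests):
                together[f][t] += 1
    return {
        f: {t: n / file_changes[f] for t, n in tests.items()}
        for f, tests in together.items()
    }


def suggest_tests(model, changed_files, min_rate=0.3):
    """Tests whose historical failure rate for any changed file is >= min_rate, riskiest first."""
    scores = {}
    for f in changed_files:
        for t, rate in model.get(f, {}).items():
            scores[t] = max(scores.get(t, 0.0), rate)
    return sorted((t for t, s in scores.items() if s >= min_rate), key=lambda t: (-scores[t], t))


# Hypothetical CI history.
history = [
    (["cart.py"], ["test_checkout"]),
    (["cart.py", "ui.css"], ["test_checkout", "test_layout"]),
    (["cart.py"], []),
    (["ui.css"], ["test_layout"]),
    (["auth.py"], []),
]

model = learn_cofailures(history)
print(suggest_tests(model, ["cart.py"]))  # ['test_checkout', 'test_layout']
```

With only a handful of runs these rates are noisy. That is the data-quality point in practice: more and cleaner history gives more trustworthy suggestions.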

AI Strategies for Software Testing

Start with high-impact areas. Test where failure costs most.

Use AI to complement your testers, not replace them. Think augmentation, not substitution.

Feed it change logs, version histories, bug reports. This is the new QA fuel.

Prioritize test coverage dynamically. Let AI decide where the risk is, not your gut.
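One way to prioritize coverage dynamically is a greedy, budget-constrained ordering. The sketch below assumes each test already has a risk estimate (for example, a historical failure rate; the numbers here are invented) and a typical runtime. It picks the tests with the highest risk per second that still fit into a fixed CI time budget. `CandidateTest` and `prioritize` are hypothetical names. Greedy selection is a heuristic, not an optimal knapsack solution.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateTest:
    name: str
    risk: float     # estimated probability of failure, 0..1 (hypothetical input)
    seconds: float  # typical runtime in seconds (must be > 0)


def prioritize(tests, budget_seconds):
    """Greedy risk-based selection: highest risk per second first, within the time budget."""
    ordered = sorted(tests, key=lambda t: t.risk / t.seconds, reverse=True)
    selected, used = [], 0.0
    for t in ordered:
        # Skip tests with no estimated risk and tests that no longer fit the budget.
        if t.risk > 0 and used + t.seconds <= budget_seconds:
            selected.append(t)
            used += t.seconds
    return selected


tests = [
    CandidateTest("test_checkout", risk=0.6, seconds=30),
    CandidateTest("test_login", risk=0.05, seconds=5),
    CandidateTest("test_layout", risk=0.3, seconds=60),
    CandidateTest("test_search", risk=0.3, seconds=10),
]

print([t.name for t in prioritize(tests, budget_seconds=50)])
# ['test_search', 'test_checkout', 'test_login']
```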

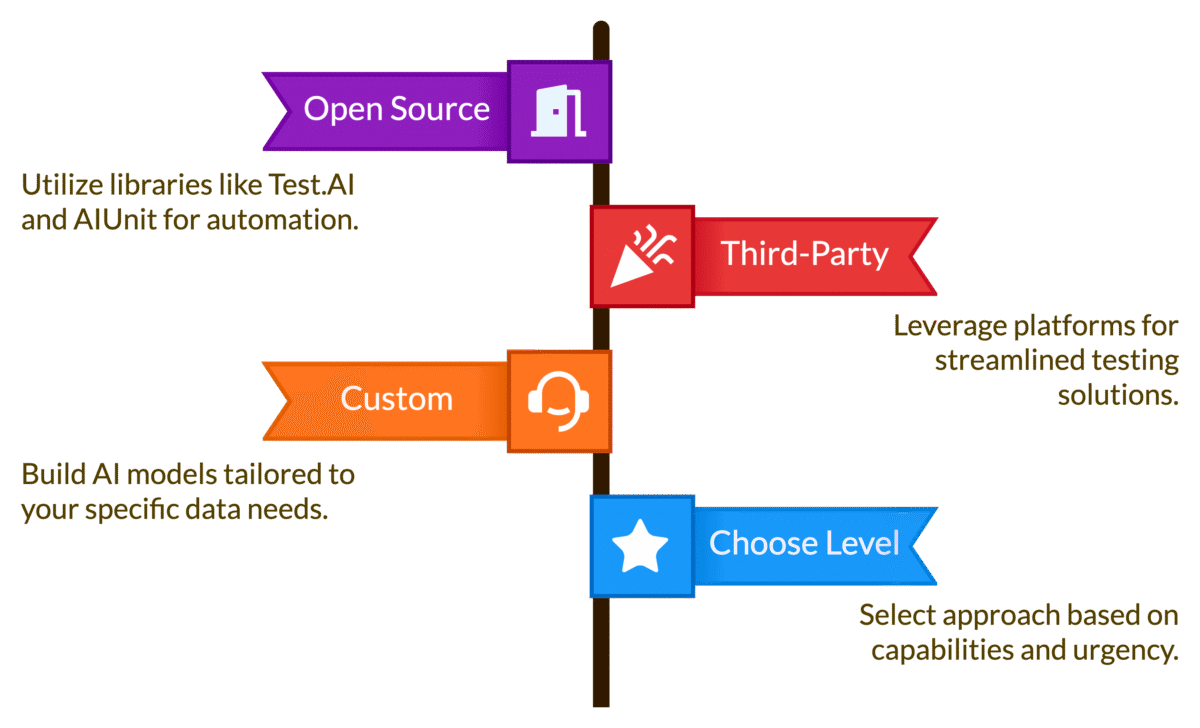

Methods to Implement AI in Testing

Build custom AI functionality from scratch.

Use open-source libraries like Test.AI or AIUnit.

Leverage third-party platforms (see tools below).

Choose the approach based on your internal capabilities and how urgently you need results.

Building Custom AI Functionality for Testing (From Scratch)

You don’t build AI because it’s cool.

You build AI because your environment is so unique that off-the-shelf tools die on contact.

Step-by-step:

Collect data from every past bug, release, and regression.

Train a model to find correlations between code changes and test failures.

Use that model to flag risk, generate tests, and auto-fix flaky ones.

Custom AI is a weapon. But only if you have the data depth to wield it.
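The correlation step could start as small as a logistic regression over a few per-change features. This sketch uses plain logistic regression trained by batch gradient descent in pure Python; it is not a description of any particular tool's model. The features (lines changed / 100, files touched / 10, and whether a hypothetical core module was touched) and the eight labelled changes are invented for illustration. A real pipeline would need far more history and a proper validation split.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, x):
    """Probability that a change with features x fails CI; w[-1] is the bias."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + w[-1])


def train_logistic(X, y, lr=0.5, epochs=5000):
    """Fit logistic-regression weights by batch gradient descent on log loss."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = predict(w, xi) - yi  # derivative of log loss w.r.t. the logit
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err            # bias term
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


# Hypothetical features per change: [lines changed / 100, files touched / 10, touches core module]
X = [
    [0.05, 0.1, 0], [0.1, 0.1, 0], [0.2, 0.2, 0], [0.3, 0.1, 0], [0.4, 0.3, 0],  # passed CI
    [1.5, 0.5, 1], [2.0, 0.8, 1], [3.0, 1.0, 1],                                # failed CI
]
y = [0, 0, 0, 0, 0, 1, 1, 1]

w = train_logistic(X, y)
small_change = [0.1, 0.1, 0]
large_change = [2.5, 0.9, 1]
print(f"small change risk: {predict(w, small_change):.2f}")
print(f"large change risk: {predict(w, large_change):.2f}")
```

The predicted probability can then feed the “flag risk” step, for example by routing high-risk changes to a fuller test run.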

Leveraging Proprietary AI Testing Tools

If you want results now, buy speed. These platforms have done the hard work. Plug, play, profit.

Top AI Testing Tools

BrowserStack

Live, automated, and visual regression testing with AI insights baked in.

Sahi Pro

Smart object identification. Minimal script maintenance.

Diffblue Cover

Java unit test generation with machine learning.

EvoSuite

Automatically generates JUnit test suites for Java classes, using evolutionary search to maximize code coverage.

Mabl

Self-healing tests with smart dashboards and data integrations.

They aren’t all equal, but they all beat the manual grind.

AI Testing Tool vs. Manual Testing Tool

| | AI Testing Tool | Manual Testing Tool |
|---|---|---|
| Speed | Fast, and gets faster over time | Slow but consistent |
| Scalability | Scales with data | Limited by headcount |
| Maintenance | Self-healing | Manual rework |
| Insight | Predictive and proactive | Reactive and after-the-fact |
| Cost | Higher upfront, cheaper long-term | Lower upfront, expensive over time |

The #1 AI-Native Test Automation Platform

The crown goes to the platform that is built on AI, not patched with it.

Natively intelligent.

Learns from every run.

Requires zero scripting to scale.

Test everything. Deploy faster. Ship with confidence.

That’s not just testing. That’s evolution.

Unlock the Future of Software Testing with AI

This isn’t about replacing testers. It’s about freeing them. AI doesn’t remove QA; it removes the reasons QA is constantly fighting fires.

Let the machines handle the busywork. Your humans deserve better problems to solve.

Democratizing Quality: Test Automation for Everyone

The gatekeepers are gone. You don’t need a 10-person QA team.

You need:

A tool.

A loop.

A strategy.

Now everyone can test. Now quality is a team sport.

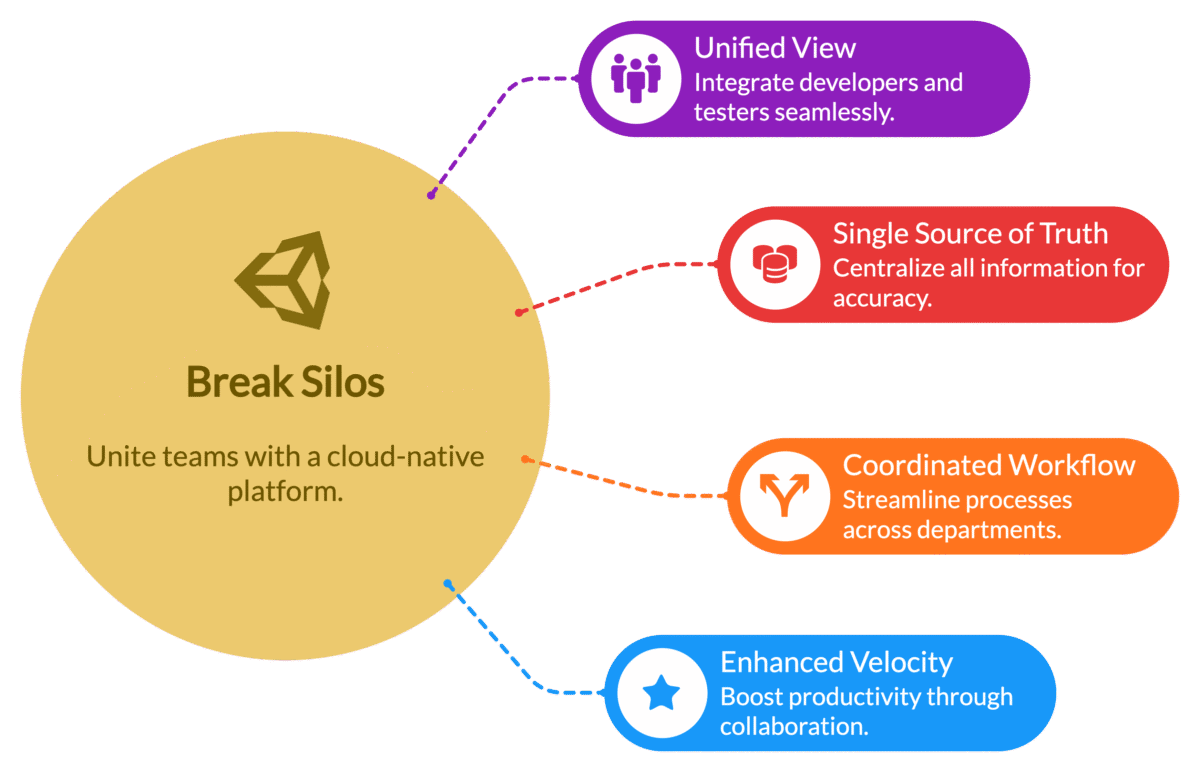

Break Silos with a Unified, Cloud-Native Platform

Developers. Testers. Product. All in one view.

One version of truth.

One workflow.

No silos.

Velocity isn’t just speed. It’s coordination.

Explore the Latest in Test Automation and Software Quality

Want to stay relevant? Stay curious.

The AI software testing space is moving fast.

Low-code testing.

AI test bots.

Predictive QA.

If you’re testing like it’s 2015, you’ve already lost.

The Real Cost of Open Source in Test Automation

Free isn’t free.

You pay in time.

You pay in maintenance.

You pay in failure.

Unless you have the in-house talent to fork and maintain the tools yourself, you’re going to fall behind. Speed isn’t optional, and open source usually can’t keep up.

Unlocking Intent: The Next Generation of Test Automation

The biggest leap? Tests that understand intent. They don’t just check whether the UI exists. They check whether it means what it’s supposed to. They validate purpose, not just presence. That’s what AI brings to testing: interpretation. The end of “pass/fail” and the beginning of understanding.

If you’re still running tests the old way, you’re not testing. You’re guessing. And AI doesn’t guess blindly. It learns from the evidence.