Developers often assume that LLMs generate secure code. In reality, AI-generated code can introduce subtle flaws that go unnoticed and leave your application vulnerable. Pair AI’s speed with detailed code reviews and continuous testing; together they give you a much stronger footing for secure software than AI output alone.

Artificial Intelligence (AI) language models, especially Large Language Models (LLMs), have become powerful tools for developers. They can assist in code generation, debugging, and even security analysis. However, integrating AI into software development requires caution to ensure that the code remains secure.

Why Secure Coding Matters in AI-Generated Code

AI LLMs like OpenAI’s GPT-4 and Google Gemini have revolutionized the way developers write code, offering rapid generation of code snippets based on specific prompts. While these tools can dramatically accelerate development, they also introduce significant security risks. Understanding these risks is essential to maintaining secure coding practices when using AI-generated code.

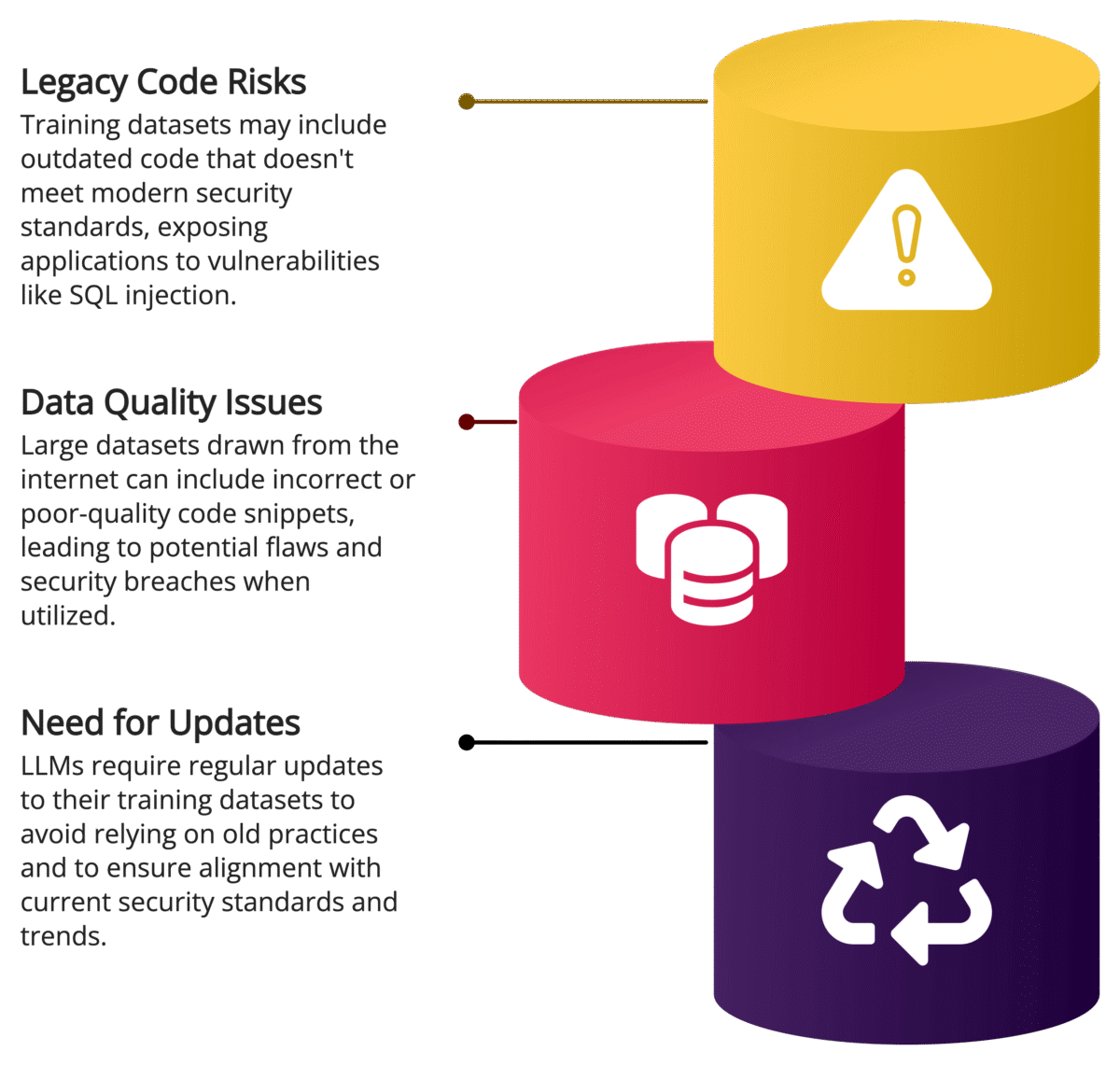

1. Training Data Limitations

LLMs are trained on extensive datasets sourced from publicly available code repositories, forums, documentation, and other internet content. While this broad training base enables LLMs to generate diverse code snippets, it also presents several security concerns:

Legacy Code: Training datasets often contain outdated code that may not adhere to current security standards. Code that was once considered best practice might now be vulnerable to known exploits, such as SQL injection or cross-site scripting (XSS).

Vulnerable Code Patterns: Since LLMs learn from publicly available data, they can inadvertently reproduce vulnerable code patterns. For example, if a model was trained on old PHP code that builds SQL strings from user input and passes them to `mysql_query()` (a function with no support for parameterized queries, removed in PHP 7), it may generate similar insecure code.

Malicious Code Fragments: Some training data may contain malicious or poorly designed code. The AI could unknowingly incorporate these patterns into generated outputs, leading to security vulnerabilities if developers are not vigilant in reviewing the code.
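To make the risk concrete, here is a minimal, self-contained sketch using Python's built-in `sqlite3` module and an in-memory database. The `users` table and its rows are hypothetical. It runs the same lookup twice: once by concatenating input into the SQL text, the pattern a model can pick up from old code, and once with a bound parameter (sqlite3 uses `?` as its placeholder).

```python
import sqlite3


def make_db():
    # Hypothetical demo table with two users
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (id, name) VALUES (?, ?)", [(1, "alice"), (2, "bob")]
    )
    return conn


def lookup_concatenated(conn, user_id):
    # Insecure: user_id becomes part of the SQL text itself
    return conn.execute("SELECT name FROM users WHERE id = " + user_id).fetchall()


def lookup_parameterized(conn, user_id):
    # Secure: user_id is bound as a value and never parsed as SQL
    return conn.execute("SELECT name FROM users WHERE id = ?", (user_id,)).fetchall()


conn = make_db()
payload = "1 OR 1=1"
print(lookup_concatenated(conn, payload))   # every row is returned
print(lookup_parameterized(conn, payload))  # no rows match
```

The injected `OR 1=1` turns the concatenated query into one that matches every row, while the parameterized version simply looks for an id equal to the literal string.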

Example:

An AI-generated Python code snippet that constructs SQL queries using string concatenation:

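```python
def get_user_data(cursor, user_id):
    # Vulnerable: user_id is concatenated straight into the SQL text
    query = "SELECT * FROM users WHERE id = " + user_id
    cursor.execute(query)
    return cursor.fetchall()
```

This is a classic example of a SQL injection vulnerability: an input such as `1 OR 1=1` becomes part of the query and returns every row. Secure code would use parameterized queries instead: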

def get_user_data(user_id):

query = "SELECT * FROM users WHERE id = %s"

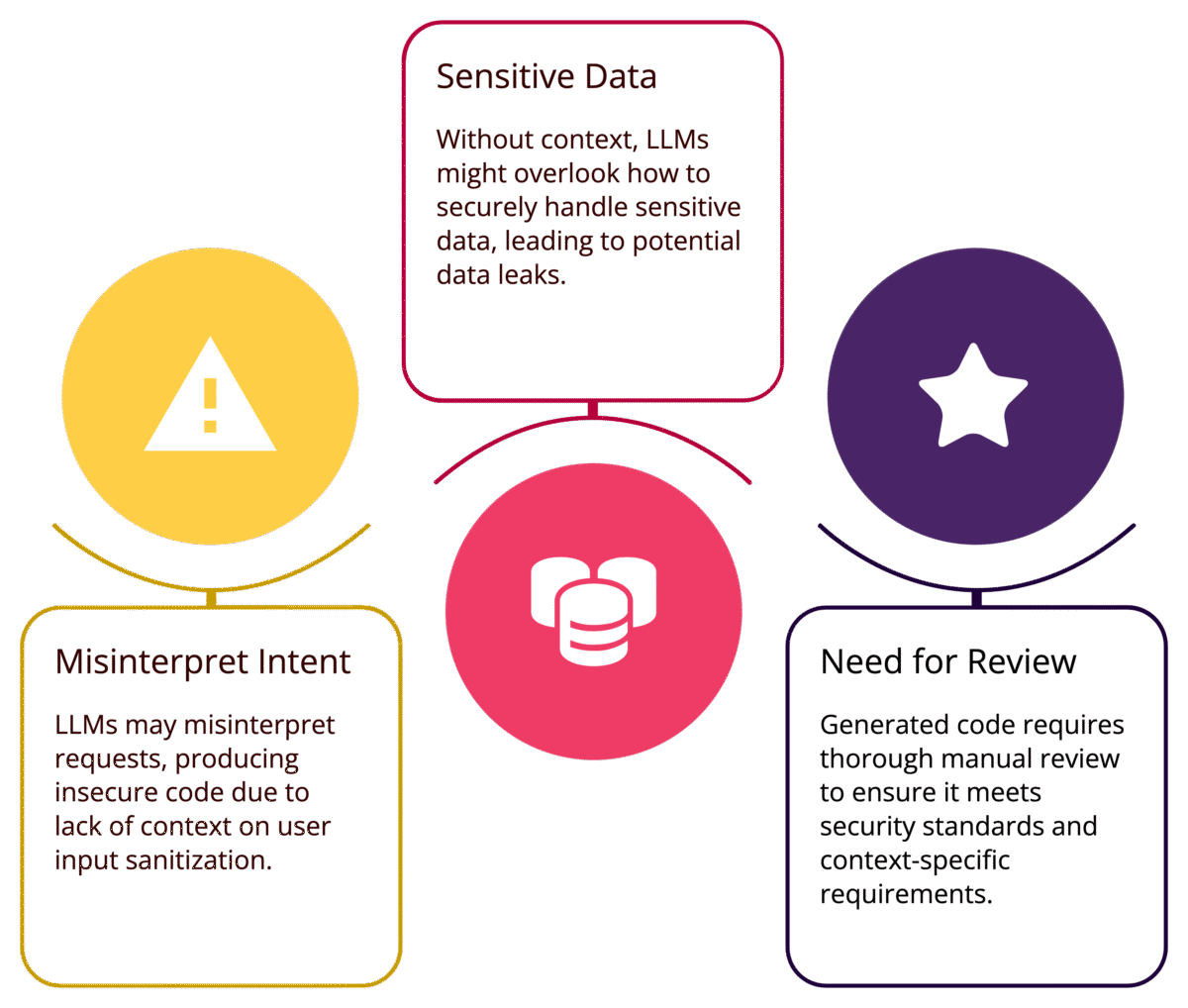

cursor.execute(query, (user_id,))2. Lack of Context

LLMs do not inherently understand the security context of the code they generate. They rely solely on the patterns in their training data without recognizing potential security implications. This lack of contextual awareness can manifest in several ways:

Misinterpreting Developer Intent: If a developer asks for code to handle user input without specifying how it should be sanitized, the AI may produce code that processes inputs directly without validation.

Overlooking Sensitive Data Handling: LLMs may generate code that handles sensitive data (e.g., passwords, tokens) without implementing proper encryption or access control measures.

Incorrect Assumptions: AI models may assume that certain inputs are safe or that a given code structure is secure, leading to potentially dangerous outputs.

Example:

An AI model might generate a password hashing function using the outdated MD5 algorithm:

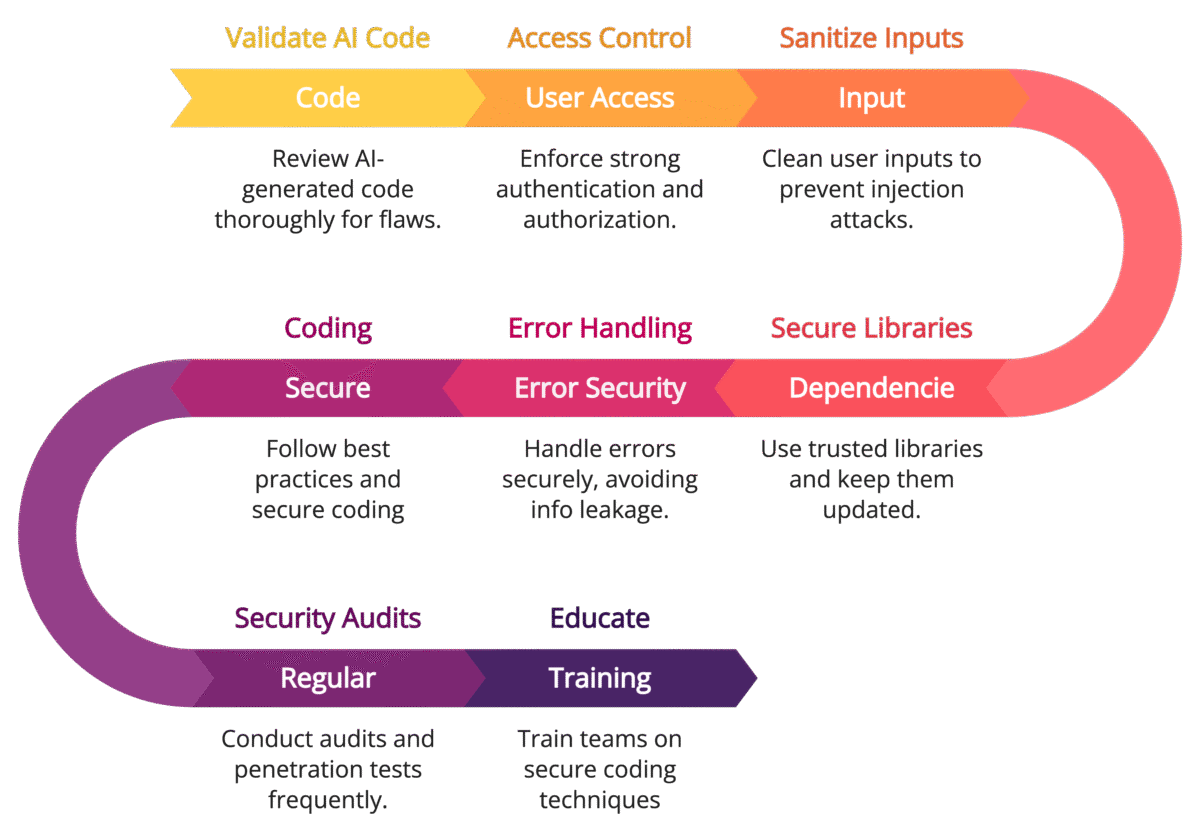
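As a text sketch of this problem and a safer alternative, the following contrasts an MD5-based password hash with a salted, deliberately slow key-derivation function from Python's standard library (`hashlib.pbkdf2_hmac`). The function names, the 16-byte salt, and the 600,000-iteration work factor are illustrative assumptions (the iteration count matches OWASP's published guidance for PBKDF2-HMAC-SHA256 at the time of writing); check current guidance, or use a dedicated library such as bcrypt or Argon2 if your project allows it.

```python
import hashlib
import hmac
import os


def hash_password_md5(password: str) -> str:
    # Insecure: MD5 is fast and unsalted, so identical passwords share a hash
    # and offline guessing is cheap
    return hashlib.md5(password.encode()).hexdigest()


ITERATIONS = 600_000  # illustrative work factor; tune to current guidance


def hash_password(password: str) -> tuple[bytes, bytes]:
    # A random per-user salt makes identical passwords hash differently
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    # Constant-time comparison avoids leaking how many bytes matched
    return hmac.compare_digest(digest, expected)


salt, stored = hash_password("correct horse")
print(verify_password("correct horse", salt, stored))  # True
print(verify_password("wrong guess", salt, stored))    # False
```

Store the salt alongside the digest; the salt is not secret, it only ensures each stored hash is unique.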

Best Practices for Writing Secure Code with AI LLMs

1. Validate AI-Generated Code Thoroughly

LLMs can produce functional code, but it is crucial to thoroughly review and validate it for security vulnerabilities. Implement the following steps:

Code Review: Inspect the generated code for common vulnerabilities like SQL injection, cross-site scripting (XSS), and buffer overflows.

Static Analysis Tools: Use tools like SonarQube, Bandit (for Python), and ESLint with security-focused plugins (for JavaScript) to detect potential security flaws.

Security Checklists: Verify against secure coding guidelines, such as the OWASP Top 10.
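Dedicated tools such as Bandit already include checks for string-built SQL, so treat the following only as an illustration of how static analysis works. It is a minimal sketch using Python's built-in `ast` module: within each function, it flags calls to any method named `execute` whose first argument is an f-string, a `+` concatenation, a `%` format, or a variable assigned one of those. The function names and the heuristic are hypothetical and will miss many cases (for example `.format()` calls or queries built across functions).

```python
import ast

SCOPES = (ast.Module, ast.FunctionDef, ast.AsyncFunctionDef)


def _is_dynamic_string(node):
    # f-strings parse to JoinedStr; "..." + x and "..." % x parse to BinOp
    return isinstance(node, ast.JoinedStr) or (
        isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod))
    )


def _walk_scope(scope):
    # Like ast.walk, but stops at nested functions so each one is analyzed alone
    stack = list(ast.iter_child_nodes(scope))
    while stack:
        node = stack.pop()
        yield node
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            stack.extend(ast.iter_child_nodes(node))


def find_unsafe_execute_calls(source):
    """Return line numbers of .execute() calls whose SQL looks dynamically built."""
    flagged = []
    for scope in ast.walk(ast.parse(source)):
        if not isinstance(scope, SCOPES):
            continue
        nodes = list(_walk_scope(scope))
        # Names assigned a dynamically built string anywhere in this scope
        tainted = {
            target.id
            for node in nodes
            if isinstance(node, ast.Assign) and _is_dynamic_string(node.value)
            for target in node.targets
            if isinstance(target, ast.Name)
        }
        for node in nodes:
            if (
                isinstance(node, ast.Call)
                and isinstance(node.func, ast.Attribute)
                and node.func.attr == "execute"
                and node.args
            ):
                query = node.args[0]
                if _is_dynamic_string(query) or (
                    isinstance(query, ast.Name) and query.id in tainted
                ):
                    flagged.append(node.lineno)
    return sorted(flagged)


SAMPLE = '''
def unsafe(cursor, user_id):
    query = f"SELECT * FROM users WHERE id = {user_id}"
    cursor.execute(query)

def safe(cursor, user_id):
    query = "SELECT * FROM users WHERE id = %s"
    cursor.execute(query, (user_id,))
'''

print(find_unsafe_execute_calls(SAMPLE))  # [4]
```

Only the `execute` call in the f-string version is reported; the parameterized version passes because its query is a plain string constant.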

Example:

If the LLM generates a SQL query like:

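```python
def get_user_data(cursor, user_id):
    # Vulnerable: the f-string interpolates user_id directly into the SQL
    query = f"SELECT * FROM users WHERE id = {user_id}"
    cursor.execute(query)
    return cursor.fetchall()
```

Replace it with a parameterized query to prevent SQL injection: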

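```python
def get_user_data(cursor, user_id):
    # The placeholder keeps user_id out of the SQL text
    query = "SELECT * FROM users WHERE id = %s"
    cursor.execute(query, (user_id,))
    return cursor.fetchall()
```

2. Implement Access Control and Authentication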

AI-generated code may overlook proper access control and authentication mechanisms. Check for the following:

API Keys and Secrets: Never hard-code sensitive information in AI-generated code. Use environment variables and secure storage mechanisms like AWS Secrets Manager.

Authorization Checks: Integrate role-based access control (RBAC) to restrict access to sensitive data and functions.

Token Expiry and Rotation: Apply token expiration policies and rotate tokens regularly so that a leaked token is only useful for a limited time.
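Three of these checks can be sketched in plain Python: reading a secret from the environment, enforcing a role, and rejecting expired tokens. This is a minimal illustration under stated assumptions: the `APP_API_KEY` variable, the `User` type, the role names, and the 15-minute token lifetime are all hypothetical, and token rotation happens on the issuing side, so it is not shown. A real application would typically rely on its framework's authentication layer and a managed secret store.

```python
import os
import time
from dataclasses import dataclass
from functools import wraps
from typing import Optional

TOKEN_LIFETIME_SECONDS = 15 * 60  # hypothetical policy: tokens live 15 minutes


def get_api_key() -> str:
    # Read the secret from the environment at runtime instead of hard-coding it
    # (APP_API_KEY is a hypothetical variable name)
    key = os.environ.get("APP_API_KEY")
    if not key:
        raise RuntimeError("APP_API_KEY is not set")
    return key


@dataclass
class User:
    name: str
    role: str
    token_issued_at: float  # Unix timestamp when the current token was issued


def token_is_expired(user: User, now: Optional[float] = None) -> bool:
    now = time.time() if now is None else now
    return now - user.token_issued_at > TOKEN_LIFETIME_SECONDS


def require_role(*allowed_roles: str):
    """Decorator that enforces token expiry and a simple RBAC check."""
    def decorator(func):
        @wraps(func)
        def wrapper(user: User, *args, **kwargs):
            if token_is_expired(user):
                raise PermissionError("token expired; re-authenticate")
            if user.role not in allowed_roles:
                raise PermissionError(f"role {user.role!r} may not call {func.__name__}")
            return func(user, *args, **kwargs)
        return wrapper
    return decorator


@require_role("admin")
def delete_account(user: User, account_id: int) -> str:
    # Hypothetical sensitive operation restricted to admins
    return f"account {account_id} deleted by {user.name}"


admin = User("ana", "admin", token_issued_at=time.time())
print(delete_account(admin, 42))  # account 42 deleted by ana
```

Keeping the checks in one decorator means each protected function declares its allowed roles in a single, reviewable place.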

3. Sanitize User Inputs Effectively

User inputs are a common attack vector. LLM-generated code might not adequately sanitize inputs, leading to vulnerabilities like XSS and command injection.

Input Validation Libraries: Use libraries such as Validator.js for JavaScript or Cerberus for Python.

Escaping User Inputs: Escape inputs for the context in which they are used (for example, HTML-escape values rendered in a page) to prevent injection attacks.
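For validation, a dependency-free allowlist check is often enough for simple fields. The sketch below uses Python's `re` module; the field rules (a username of 3 to 32 letters, digits, or underscores, and an age between 0 and 150) are hypothetical examples. Rejecting anything that does not match an explicit pattern is generally safer than trying to strip out known-bad characters.

```python
import re

# Hypothetical allowlist: 3 to 32 characters, letters, digits and underscore only
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")


def validate_username(value: str) -> str:
    # fullmatch requires the whole string to match, not just a prefix
    if not USERNAME_PATTERN.fullmatch(value):
        raise ValueError("invalid username")
    return value


def validate_age(value: str) -> int:
    # Convert first, then range-check; int() raises ValueError on non-numeric input
    age = int(value)
    if not 0 <= age <= 150:
        raise ValueError("age out of range")
    return age
```

Inputs such as `alice; rm -rf /` or `<script>` fail the username check outright, so they never reach a shell command or an HTML page.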

Example:

Sanitizing user input in Python:

```python
from html import escape


def sanitize_input(user_input):
    # Escape &, <, > and quotes so the value renders as text, not HTML
    return escape(user_input)
```

4. Secure Dependencies and Libraries

LLMs may recommend libraries that are outdated or have known vulnerabilities. To mitigate risks:

Dependency Scanning: Use tools like Snyk, Dependabot, and npm audit to identify and fix vulnerable dependencies.

Version Management: Specify exact versions in `requirements.txt` or `package.json` to prevent unintended upgrades that may introduce vulnerabilities.
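One way to enforce version pinning in CI is a small check that every entry in `requirements.txt` is pinned with `==`. The sketch below is a hypothetical helper with deliberately simple parsing: it skips comments, blank lines and pip options, ignores environment markers, and does not understand URL-based requirements. pip's `--require-hashes` mode or a lock-file tool is a more thorough option.

```python
def find_unpinned(requirements_text: str) -> list[str]:
    """Return requirement lines that are not pinned to an exact version with ==."""
    unpinned = []
    for raw_line in requirements_text.splitlines():
        # Drop inline comments and surrounding whitespace
        line = raw_line.split("#", 1)[0].strip()
        # Skip blank lines and pip options such as -r or --index-url
        if not line or line.startswith("-"):
            continue
        # Ignore environment markers like '; python_version < "3.11"'
        spec = line.split(";", 1)[0].strip()
        if "==" not in spec or any(op in spec for op in (">", "<", "~=", "!=", "*")):
            unpinned.append(raw_line.strip())
    return unpinned


SAMPLE = """
# Pinned: exact versions
requests==2.31.0
flask==3.0.0  # web framework
# Not pinned
django>=4.2
numpy
"""

print(find_unpinned(SAMPLE))  # ['django>=4.2', 'numpy']
```

A CI step could fail the build whenever this list is non-empty, so loosely specified dependencies are caught before they are installed.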

5. Implement Secure Error Handling

LLMs may generate generic error-handling logic that exposes sensitive information. Follow these practices:

Error Messages: Do not expose internal error details or stack traces to users; return a generic message instead.

Logging Practices: Log error details on the server side with a logging library such as Loguru for Python or Winston for Node.js, and keep sensitive data such as passwords and tokens out of the logs.
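A framework-free Python sketch of these two practices, using the standard `logging` and `uuid` modules: full details, including the traceback, go to the server-side log under a random error ID, while the caller only sees a generic message carrying that ID so support staff can find the matching log entry. The `handle_request` wrapper, its response dictionary, and the failing handler are hypothetical.

```python
import logging
import uuid

logger = logging.getLogger("app")


def handle_request(handler, *args):
    """Run a request handler; on failure, log details privately and return a generic error."""
    try:
        return {"status": 200, "body": handler(*args)}
    except Exception:
        error_id = uuid.uuid4().hex
        # logger.exception records the full traceback server-side only
        logger.exception("Unhandled error %s", error_id)
        # The client sees no stack trace or internal details, just a reference ID
        return {"status": 500, "body": f"An unexpected error occurred (reference {error_id})."}


def failing_handler():
    # Hypothetical failure whose message contains internal details
    raise RuntimeError("database password rejected for user db_admin")


response = handle_request(failing_handler)
print(response["status"])  # 500
```

The internal message about `db_admin` appears only in the log output, never in the response body.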

Example:

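Implement secure error handling in a Node.js (Express) application:

```javascript
// Express treats a middleware with four arguments as an error handler
app.use((err, req, res, next) => {
  // Record the details in server-side logs only
  console.error(err.message);
  // Send the client a generic message without internal details
  res.status(500).send("An unexpected error occurred.");
});
```

6. Apply Secure Coding Frameworks and Standards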

Leverage established security frameworks and standards to maintain code integrity:

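Secure Coding Guidelines: Use the CWE Top 25 Most Dangerous Software Weaknesses list (originally published as the CWE/SANS Top 25) as a checklist of weaknesses to avoid.

Encryption Standards: Encrypt sensitive data with well-maintained libraries such as PyCryptodome for Python or the built-in crypto module in Node.js (CryptoJS, often suggested for JavaScript, is no longer actively developed).

Compliance Checklists: Align with security standards such as ISO/IEC 27001 and NIST guidance like the Secure Software Development Framework (SSDF, SP 800-218).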

7. Regular Security Audits and Penetration Testing

LLMs may not detect advanced attack vectors. Conduct regular security assessments to uncover potential vulnerabilities:

Penetration Testing Tools: Use tools like Burp Suite, OWASP ZAP, and Metasploit.

Code Audits: Run automated security checks in the CI/CD pipeline and complement them with periodic security-focused code audits.
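As one example of a pipeline gate, Bandit can write a machine-readable report with `bandit -r src -f json -o bandit-report.json`. The sketch below assumes that report layout (a top-level `results` list whose entries carry `issue_severity`, `test_id`, `filename` and `line_number`) and returns a failing exit status when any finding reaches a chosen severity. The `src` path, the report filename, and the `MEDIUM` threshold are illustrative assumptions; Bandit's own severity filter (`-l`, `-ll`, `-lll`) and non-zero exit status can provide similar gating without extra code.

```python
import json

SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3}


def blocking_findings(report: dict, threshold: str = "MEDIUM") -> list[str]:
    """Return readable descriptions of findings at or above the severity threshold."""
    minimum = SEVERITY_RANK[threshold]
    found = []
    for result in report.get("results", []):
        if SEVERITY_RANK.get(result.get("issue_severity", ""), 0) >= minimum:
            found.append(
                f'{result["filename"]}:{result["line_number"]} '
                f'{result["test_id"]} ({result["issue_severity"]})'
            )
    return found


def main(report_path: str) -> int:
    with open(report_path, encoding="utf-8") as handle:
        findings = blocking_findings(json.load(handle))
    for finding in findings:
        print(finding)
    # A non-zero exit status makes most CI systems mark the step as failed
    return 1 if findings else 0
```

A pipeline step could run Bandit and then call `sys.exit(main("bandit-report.json"))`; since Bandit itself exits non-zero when it finds issues, the report step may need to tolerate that status so this script makes the final decision.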

AI LLMs can significantly expedite software development, but they are not infallible. Ensuring secure code requires a proactive approach that combines AI-assisted development with robust security practices. By validating AI-generated code, implementing access controls, sanitizing inputs, and conducting regular security audits, developers can effectively mitigate risks and maintain code integrity.