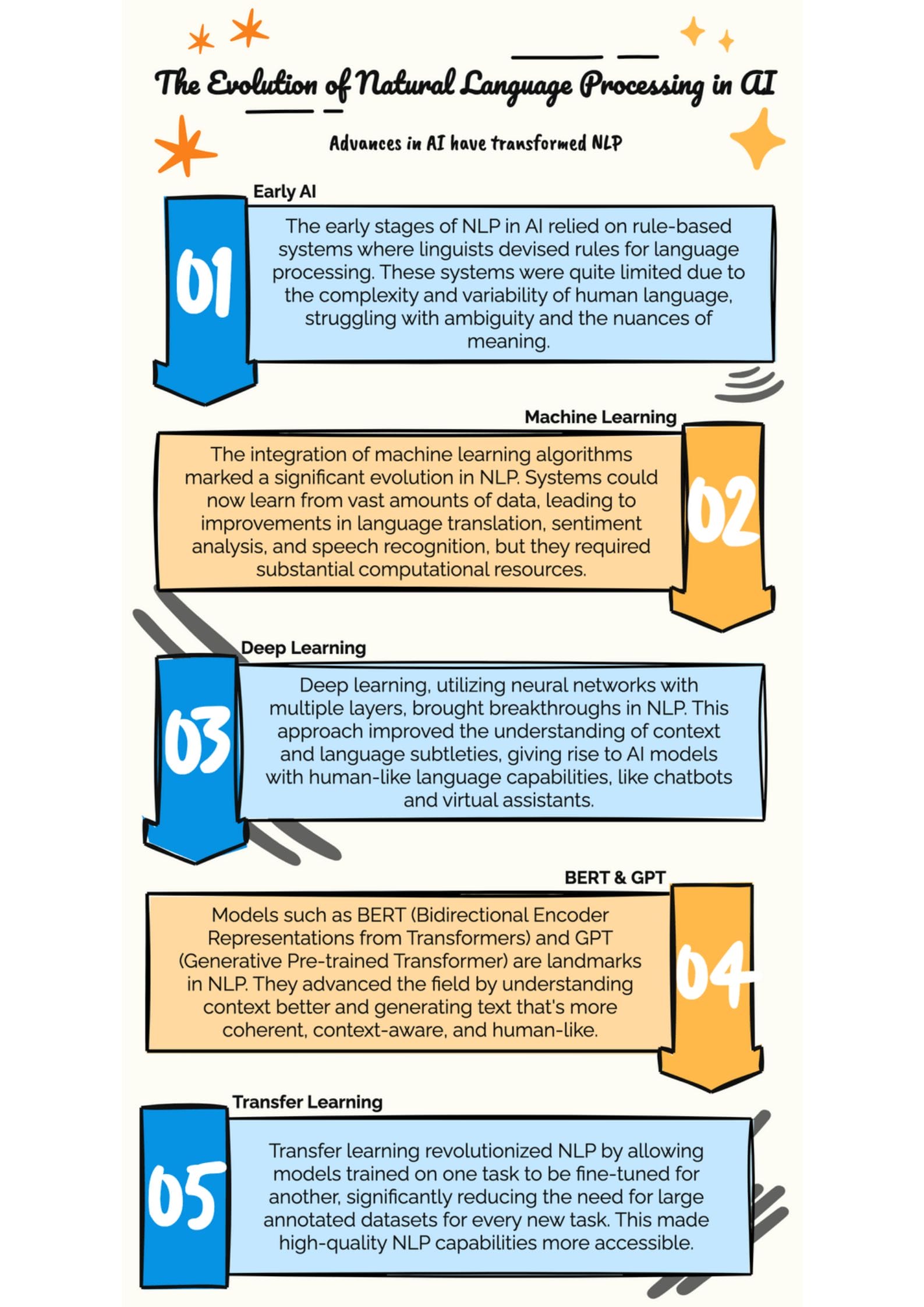

Imagine talking to your computer, and it understands you perfectly. Sounds like science fiction, right? But today, it’s more of a reality than ever before. Natural Language Processing (NLP), a subfield of artificial intelligence, has made incredible strides over the years. It’s transforming how we interact with technology, making it more intuitive and human-like.

The Dawn of Natural Language Processing

Back in the day, computers were glorified calculators. They could crunch numbers but struggled with human language. Early NLP was all about basic keyword matching. Think of it as a search engine that didn’t really understand what you wanted. For example, a simple query like “What’s the weather like today?” might have returned every document containing the words “weather” and “today,” but no actual weather forecast.
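To make the limitation concrete, here is a minimal sketch of keyword matching in Python. The mini-corpus, the stopword list, and the function names are all made up for illustration; real early systems differed in detail. The point is that the matcher returns anything that contains the query words and has no idea which document actually answers the question.

```python
import re

# Hypothetical mini-corpus; these documents are invented for illustration.
documents = [
    "The weather forecast for today is sunny with a high of 22 degrees.",
    "Today the committee discussed how weather affects crop insurance.",
    "A history of weather balloons, from 1896 to today.",
    "Ten recipes for a rainy afternoon.",
]

# A tiny, hand-picked stopword list (an assumption, not a standard one).
STOPWORDS = {"what", "s", "the", "like", "is", "a", "of", "for"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def keyword_search(query, docs):
    """Return every document that contains all non-stopword query terms."""
    terms = set(tokenize(query)) - STOPWORDS
    return [doc for doc in docs if terms <= set(tokenize(doc))]


matches = keyword_search("What's the weather like today?", documents)
for doc in matches:
    print(doc)
```

Three of the four documents match, because each contains both “weather” and “today.” Only one of them is a forecast, and the matcher has no way to tell which.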

The limitations were clear. Computers couldn’t grasp context, nuance, or the intricacies of human language. This led to frustration and a lot of miscommunication between humans and machines.

The Rise of Machine Learning

Enter machine learning (ML). This was a game-changer for NLP. Instead of hard-coding rules for every possible sentence structure, ML allowed computers to learn from vast amounts of data. This meant they could start to understand context and make better predictions.

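One early breakthrough was the Hidden Markov Model (HMM). An HMM treats what it observes, such as sounds or words, as the output of a sequence of hidden states, such as phonemes or parts of speech, and uses probabilities to find the most likely state sequence. This helped in tasks like speech recognition and part-of-speech tagging. For instance, a speech recognizer could work out that after “Good morning,” the next word is more likely to be “everyone” or “sir” than an unrelated word that merely sounds similar.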

Code Example: Simple HMM for Part-of-Speech Tagging

Here’s a basic example of how an HMM might be used for part-of-speech tagging in Python:

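    from nltk.tag import hmm

    # Toy training data: each sentence is a list of (word, tag) pairs using
    # Penn Treebank tags (DT = determiner, NN = singular noun,
    # VBZ = third-person singular present verb)
    training_data = [
        [('the', 'DT'), ('dog', 'NN'), ('barks', 'VBZ')],
        [('a', 'DT'), ('cat', 'NN'), ('meows', 'VBZ')],
    ]

    # Estimate transition and emission probabilities from the tagged sentences
    trainer = hmm.HiddenMarkovModelTrainer()
    tagger = trainer.train_supervised(training_data)

    # Tag a new sentence built from words seen in training
    sentence = ['the', 'cat', 'barks']
    tagged = tagger.tag(sentence)
    print(tagged)

In this code, we train an HMM on a small set of tagged sentences. Then we use it to tag a new sentence, showing how the model predicts parts of speech for a combination of words it has not seen together before.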

The Deep Learning Revolution

While HMMs and other traditional models were a step forward, they still had limitations. They struggled with understanding long-range dependencies in language, like the relationship between words at the start and end of a sentence.

Deep learning changed the game. Neural networks, especially Recurrent Neural Networks (RNNs) and their gated variant, Long Short-Term Memory (LSTM) networks, allowed for better handling of sequences. These models could carry information across longer stretches of text, improving understanding and predictions.

One significant leap was the introduction of the Transformer model in 2017. Transformers use a mechanism called attention, which lets the model weigh every word in the input against every other word and focus on the ones most relevant to the word it is currently processing. This is like reading a book and being able to remember details from the first chapter while reading the last one.
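The core of attention fits in a few lines. The sketch below is a bare-bones version of scaled dot-product attention in plain Python, with made-up two-dimensional vectors standing in for word representations. One simplification to be aware of: a real Transformer first passes each vector through learned projections to get separate queries, keys, and values, whereas this sketch reuses the same vectors for all three.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        output = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up 2-dimensional vectors for three tokens (illustrative only).
tokens = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = attention(vectors, vectors, vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one token attends to every token in the sentence, including itself. Because every token looks at every other token directly, distance within the sentence does not weaken the connection.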

Code Example: Transformer for Language Translation

Here’s a simple example that uses a pre-trained Transformer for translation through the Hugging Face transformers library:

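    from transformers import pipeline

    # Load a pre-trained English-to-French translation pipeline.
    # No model is named here, so the library falls back to its default checkpoint.
    translator = pipeline("translation_en_to_fr")

    # Translate a sentence; the pipeline returns a list with one dict per input
    result = translator("Hello, how are you?")
    print(result[0]['translation_text'])

In this snippet, we use a pre-trained Transformer model to translate English text to French. Pre-trained Transformers like this one can handle complex language tasks such as translation in a few lines of code, with no task-specific training on your part.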

Applications of NLP Today

NLP is everywhere. From chatbots and virtual assistants to sentiment analysis and translation services, NLP applications are vast and varied. Virtual assistants like Siri and Alexa use NLP to understand and respond to user queries. Social media platforms leverage sentiment analysis to gauge public opinion. Translation services break down language barriers, making the world more connected.

Example: Chatbots in Customer Service

Consider chatbots in customer service. A few years ago, interacting with a chatbot was often a frustrating experience. They were limited to scripted responses and struggled with anything outside their narrow programming. Today, advanced NLP allows chatbots to understand and respond to a wide range of queries naturally.

For instance, if you ask a modern chatbot, “I need help with my order,” it can understand the context, pull up your order details, and provide relevant assistance. This not only improves customer satisfaction but also reduces the workload on human customer service agents.
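To see why the older scripted bots felt so brittle, here is a minimal sketch of one. The phrases, replies, and function name are hypothetical. The bot only responds when the message matches a scripted phrase exactly, so the natural request from the example above falls straight through to the fallback.

```python
# Hypothetical scripted responses keyed by an exact, normalized phrase.
SCRIPT = {
    "track my order": "Please enter your order number.",
    "reset my password": "A reset link has been sent to your email.",
}
FALLBACK = "Sorry, I didn't understand that."


def scripted_reply(message):
    """Reply only when the message exactly matches a scripted phrase."""
    key = message.lower().strip(" .!?")
    return SCRIPT.get(key, FALLBACK)


print(scripted_reply("Track my order."))
print(scripted_reply("I need help with my order"))
```

The first message gets a useful reply and the second gets the fallback, even though both are about the same thing. A modern NLP-based chatbot maps both messages to the same intent instead of relying on exact wording.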

Ethical Considerations in NLP

As we advance, it’s crucial to address ethical concerns. NLP models can inadvertently perpetuate biases present in their training data. This can lead to discriminatory outcomes, especially in sensitive applications like hiring or law enforcement. Ensuring fairness and transparency in NLP systems is vital.
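One simple way to start checking for this kind of bias is to compare how often a model says “yes” for different groups. The sketch below computes that gap, sometimes called the demographic parity difference. The decisions are made-up data for a hypothetical résumé-screening model, and a single number like this is only a first signal, not a full fairness audit.

```python
from collections import defaultdict

# Made-up screening decisions: (group, model_said_yes). Illustrative only.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]


def selection_rates(records):
    """Return the share of positive decisions for each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


rates = selection_rates(decisions)
gap = max(rates.values()) - min(rates.values())
print(rates)  # → {'A': 0.75, 'B': 0.25}
print(gap)    # → 0.5
```

A large gap does not prove discrimination on its own, but it is a clear prompt to look at the training data and the model more closely.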

The Future of NLP

Looking ahead, the future of NLP is exciting. With advancements in AI, we can expect even more sophisticated language understanding. One promising area is zero-shot learning, where models can perform tasks they were never explicitly trained on. Imagine a chatbot that can handle new, unseen questions seamlessly. This capability could revolutionize how we interact with technology, making it far more adaptable and efficient.

Zero-Shot Learning

Zero-shot learning (ZSL) is a fascinating leap in AI where models are capable of performing tasks without having been explicitly trained on them. It relies on generalizing from known data to new, unseen situations. For instance, a zero-shot model trained to understand and generate text could potentially answer questions on topics it hasn’t encountered before, leveraging its ability to understand context and semantics from its training.

Consider this scenario: You ask a chatbot a question about a newly released smartphone model that it hasn’t been specifically trained on. Using zero-shot learning, the chatbot can draw on its understanding of previous smartphone models and general tech knowledge to give a coherent and relevant answer, although it cannot know specifications that never appeared in its training data. This reduces the need for continuous retraining and updating, making AI applications more robust and versatile.

Code Example: Zero-Shot Classification

Here’s an example using Hugging Face’s transformers library to perform zero-shot classification:

    from transformers import pipeline

    # Initialize the zero-shot classification pipeline.
    # No model is named here, so the library falls back to its default checkpoint.
    classifier = pipeline("zero-shot-classification")

    # Define the sequence to classify and candidate labels
    sequence_to_classify = "I recently purchased the latest iPhone and it's fantastic."
    candidate_labels = ["technology", "sports", "politics"]

    # Perform zero-shot classification. The result is a dict holding the input
    # sequence, the labels sorted from most to least likely, and their scores.
    result = classifier(sequence_to_classify, candidate_labels)
    print(result)

In this code, the model scores a sentence about a new iPhone against each candidate label and should rank “technology” highest, even though it was never explicitly trained on these labels or this sentence.

Multimodal Integration

Another exciting development is the integration of NLP with other AI fields, such as computer vision. This could lead to more immersive and interactive experiences. For example, an AI system could analyze images and generate detailed descriptions, making content more accessible to visually impaired individuals.

Imagine a scenario where an AI assistant can not only read and understand your text but also analyze accompanying images. For instance, if you upload a photo of a sunset, the AI could generate a poetic description, enhancing the overall user experience. This integration opens up a multitude of applications across various industries, from enhanced virtual assistants to sophisticated surveillance systems.

Code Example: Multimodal AI with Image Captioning

Here’s an example using a multimodal AI model for image captioning:

    from transformers import VisionEncoderDecoderModel, ViTImageProcessor, AutoTokenizer
    from PIL import Image

    # Load the pre-trained captioning model, image processor, and tokenizer
    # (ViTImageProcessor replaces the deprecated ViTFeatureExtractor)
    checkpoint = "nlpconnect/vit-gpt2-image-captioning"
    model = VisionEncoderDecoderModel.from_pretrained(checkpoint)
    image_processor = ViTImageProcessor.from_pretrained(checkpoint)
    tokenizer = AutoTokenizer.from_pretrained(checkpoint)

    # Load the image (replace the placeholder path) and convert it to RGB,
    # since the model expects three color channels
    image = Image.open("path_to_image.jpg").convert("RGB")
    pixel_values = image_processor(images=image, return_tensors="pt").pixel_values

    # Generate a caption with beam search and decode the token ids to text
    output_ids = model.generate(pixel_values, max_length=16, num_beams=4)
    caption = tokenizer.decode(output_ids[0], skip_special_tokens=True)
    print(caption)

In this code, a pre-trained model generates a caption for a given image, demonstrating the synergy between NLP and computer vision.

Personalization and Context Awareness

The future of NLP will also focus on personalization and context awareness. Future NLP models will not only understand general language but will also adapt to individual users’ preferences and contexts. For example, an AI-powered virtual assistant could learn your unique speaking style, preferred topics, and even your mood, providing responses that feel genuinely personalized.

Consider an AI that assists with mental health. By understanding the context of your conversations over time, it could offer more tailored advice, recognize patterns in your mood, and even alert you or your caregivers to potential issues.

Conversational Agents

With these advancements, conversational agents will become more sophisticated. They will be able to handle multi-turn dialogues, remember past interactions, and understand the subtleties of human conversation. This will make interactions with AI feel more natural and human-like.

For instance, a future customer service chatbot could handle complex queries over multiple messages, remembering details from previous interactions and providing consistent, accurate support. This will significantly enhance user satisfaction and operational efficiency.
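The “remembering” part is less mysterious than it sounds. The sketch below shows the basic idea with a hypothetical `Conversation` class that keeps the message history and stores details it has picked up along the way. The order-number format (a `#` followed by digits) and the canned replies are assumptions for illustration; a production agent would use a language model to produce the replies, but it still needs state like this behind it.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps the running history and any details extracted from it."""

    history: list = field(default_factory=list)
    slots: dict = field(default_factory=dict)

    def reply(self, message):
        self.history.append(("user", message))
        # Remember an order number if the user mentions one
        # (hypothetical format: '#' followed by digits).
        match = re.search(r"#(\d+)", message)
        if match:
            self.slots["order_id"] = match.group(1)
        if "order_id" in self.slots:
            answer = f"Looking into order #{self.slots['order_id']}."
        else:
            answer = "Which order is this about?"
        self.history.append(("bot", answer))
        return answer


chat = Conversation()
print(chat.reply("My package is late."))
print(chat.reply("It's order #48213."))
print(chat.reply("Can you also change the delivery address?"))
```

The third message never mentions the order number, yet the bot still knows which order it concerns, because the detail was stored on the second turn.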

Wrapping Up

The evolution of NLP is a testament to the incredible progress in AI. From rudimentary keyword matching to sophisticated deep learning models, NLP has come a long way. It’s transforming how we interact with technology, making it more intuitive and human-like.

As we continue to push the boundaries, the potential applications are limitless. Whether it’s improving customer service, enhancing accessibility, or breaking down language barriers, NLP is at the forefront of making technology more human.
